Does Rate My Professor Deserve a Perfect Rating?

Type most professors’ names plus Amherst College into Google, and within the first page of results, you’ll see their Rate My Professor (RMP) page, where they receive a score from one to five, the average of all anonymous student ratings. You might sigh in relief, finding that your professor for next semester’s class has a perfect score of 5.0. Or you might groan, seeing an abysmal score of 1.0.

You might also notice how few ratings there are: most professors at Amherst do not receive more than 10 in total. How much can you trust that score? Behind each anonymous rating, there may be a student seriously evaluating or one simply ranting about a low grade. Furthermore, how do you know that these ratings are actually from students?

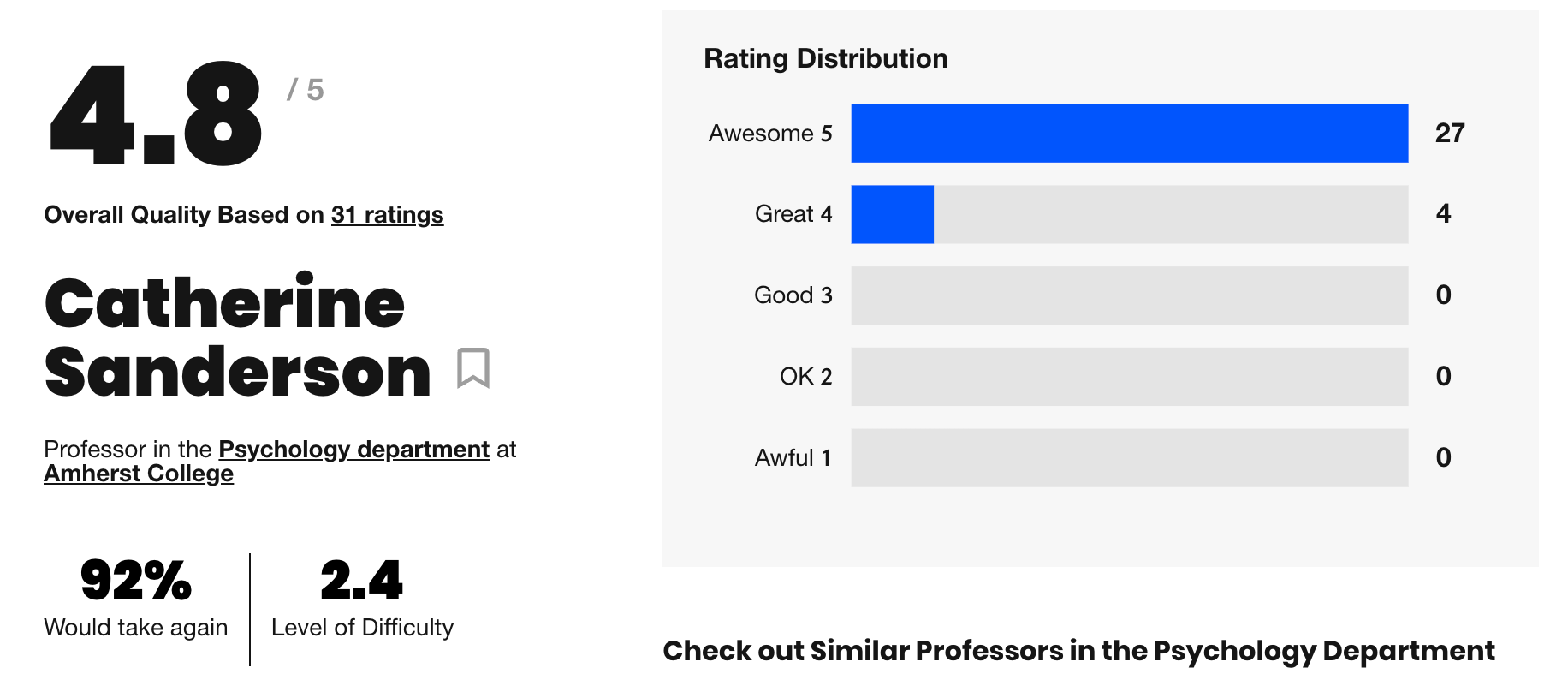

To Catherine Sanderson, Poler Family Professor of Psychology, her RMP score of 4.8 is not conclusive at all.

In 2011, Sanderson received an email saying that she “has been chosen as one of America’s best professors,” which she thought was a scam. She would later find out, after being contacted by the admissions office, that she was to be featured in a Princeton Review book titled “Top 300 Professors of America.” The selection of professors was based entirely on their RMP scores.

“I thought it was a dubious research method, and that’s why I was like, ‘That can’t literally be how they’re choosing,’” Sanderson said. “At the time, I’[d] been here for maybe 10 years and taught 200 students a year, and there were like 17 people that had rated me. They rated me well, but it was 17 out of 2,000 students.”

To date, Sanderson has taught over 5,000 students at Amherst and received only 31 ratings on RMP. She sees RMP scores as highly inaccurate not only because of the very limited number of ratings, but also because of the anonymity and the lack of assessment compared to course evaluations, which ask for more nuanced feedback specifically from students enrolled in her classes.

“Were those 31 people even in my class? I’m not sure. All those could be me,” Sanderson said. “I will also say that people are much more honest when they have to sign their name and own their response. When Amherst does evaluations that we consider for tenure, the students have to sign their name.”

Ivan Contreras, associate professor of mathematics, sees RMP as “problematic” because the website is “totally unmodulated and unregulated.” He finds it very similar to social media conversations where information can easily be distorted.

“[It’s like] information that you get from Facebook or Twitter,” Contreras said. “It’s not clear what the moderation process is, it’s not clear if the people writing those ratings are part of the institution. There are so many unknowns about the process. What if a person is writing the same ratings again and again and again?”

Indeed, in 2020, James E. Ostendarp Professor of Psychology Sarah Turgeon faced a problem similar to the one Contreras outlined. Turgeon recalled that a student who was upset with her gathered many people who had not taken her class to post low ratings and negative comments on RMP.

“I know because they didn’t have the course number correct, they were all submitted at the same time and said essentially the same thing,” Turgeon said. Although anyone can report comments, RMP ultimately decides whether they are taken down or not.

While she called the ratings “ethically problematic,” she said, “As long as they aren’t used in any official capacity by administrations, which I trust they are not, I don’t see them as a big deal.” Students, however, continue to see a 2.6 for Turgeon on RMP, highlighted in red.

For Ted Melillo, William R. Kenan, Jr. Professor of History and Environmental Studies, his 4.9 score on RMP, which he discovered during our interview, was a pleasant surprise. As he scrolled through nine pages of ratings dating back to 2011, he found the students’ comments “very intriguing.”

“I’m glad they thought my lectures were interesting,” he said, reading reviews aloud, “‘Bit lower than I’d hoped for on the grades’ — Well, that’s always the case at Amherst.”

Melillo, with 39 ratings, is Amherst’s most-rated professor on RMP. This is still a relatively small number compared with, for example, the University of Massachusetts, Amherst, where many professors have more than 100 ratings. Of the few ratings Amherst professors usually receive, very few were posted within the past year. In addition, some professors have no ratings at all or do not even have an RMP profile; profiles are created by students.

Sanderson sees Amherst students’ low activity on RMP as a result of a smaller campus and higher average quality of faculty.

“On a small campus, word travels fast about professors, and you can easily ask a friend, a roommate, or a teammate,” Sanderson said. “At Amherst, if you’re a great researcher but not a good teacher, you don’t get tenure. There’s no sort of panic to avoid a really bad professor because we have a mechanism in place that we don’t hire those people.”

Many students also agreed that talking to friends, especially upperclassmen, is more helpful than using RMP. Alex Coiov ’28 recalled having referenced RMP over the summer while choosing courses for his first semester, but said he will not consider using it again for spring course selections.

“The platform certainly has its uses, as it can help incoming first-year students get a sense of what to expect from certain professors and courses,” Coiov said. “However, the sheer lack of consistent information makes the platform outdated and useless.”

Students also use Fizz and GroupMe to learn more about professors. Most posts soliciting advice on professors receive replies.

Regardless, RMP remains a readily available site and perhaps a last resort. How should we make sense of RMP ratings, then? Contreras offered a simple piece of advice: just take them with a grain of salt.

“If I were a student with access to this type of information online, and being aware of how this is done — we don’t even know who takes profit, it’s nothing institutional,” Contreras said. “So that’s why [we should] reflect more on the information that we gather.”