Amherst Bytes: Moore v Koomey: The New Law of the Land

Anyone who has purchased a personal computer in the last decade knows that computers tend to grow more powerful at an amazing rate. Buy a MacBook, wait 12 months and the next model runs at what seems to be twice the speed of your old one. People complain that they just bought a new machine, wonder if anyone needs this level of speed and ask when the hell that Steve Jobs guy is going to stop telling them they need new gizmos and gadgets and whatchamacallits.

This seemingly conspiratorial trend, however, is nothing more than a simple law of progress in computing design. In 1965, Electronics Magazine published an article in which Gordon E. Moore observed that the increases in integrated circuits’ transistor counts had been accelerating. A decade after the original article and seven years after he had co-founded Intel, Moore revised his thesis to specify that such counts doubled every two years. With the increasing performance of transistors factored in, a colleague at Intel then concluded that integrated circuits themselves doubled in performance every 18 months.

In plain terms, computers double in speed every 18 to 24 months. Frequencies may stay the same, so you won’t notice the number of gigahertz increase, but new processor architectures and more cores mean our computers keep on getting faster and faster. There is a theoretical limit to Moore’s Law — a moment beyond which computing progress slows — but we have yet to catch sight of it. In Moore’s own words, “Moore’s Law is a violation of Murphy’s Law. Everything gets better and better.”
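To make the doubling rule concrete, here is a minimal Python sketch of the arithmetic. It assumes idealized, perfectly steady doubling, which real hardware only approximates, and the function name `speedup` is purely illustrative.

```python
def speedup(years: float, doubling_months: float) -> float:
    """Performance multiple after `years`, assuming one doubling
    every `doubling_months` months (an idealized rule of thumb)."""
    doublings = years * 12 / doubling_months  # how many doublings fit in the span
    return 2.0 ** doublings


if __name__ == "__main__":
    # Compare the 18-month and 24-month figures over a six-year span.
    for months in (18, 24):
        print(f"doubling every {months} months: {speedup(6, months):.0f}x after 6 years")
```

Under these assumptions, six years gives four doublings at the 18-month pace (16 times the speed) and three doublings at the 24-month pace (8 times the speed).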

But Moore’s axiom may become obsolete before it expires. It’s becoming more and more apparent that computing power eclipses the needs of most consumers these days. MacBook Airs and iPhones can accomplish most of our routine tasks without working up a sweat. The real power in modern computing is confined to server farms, stashed miles away from users. It might be time to admit that most of us just don’t need that much more power.

Think about the most frequent problem with your computer or cellphone. Sure, you have the occasional virus, perhaps a persistent software bug, and maybe even the rare hardware failure. But every day, we’re confronted with the incontrovertible fact that we live by the batteries. Real-world computer benchmarks would be measured in minutes of battery life, not gigahertz or gigaflops or how many seconds a file transfer takes.

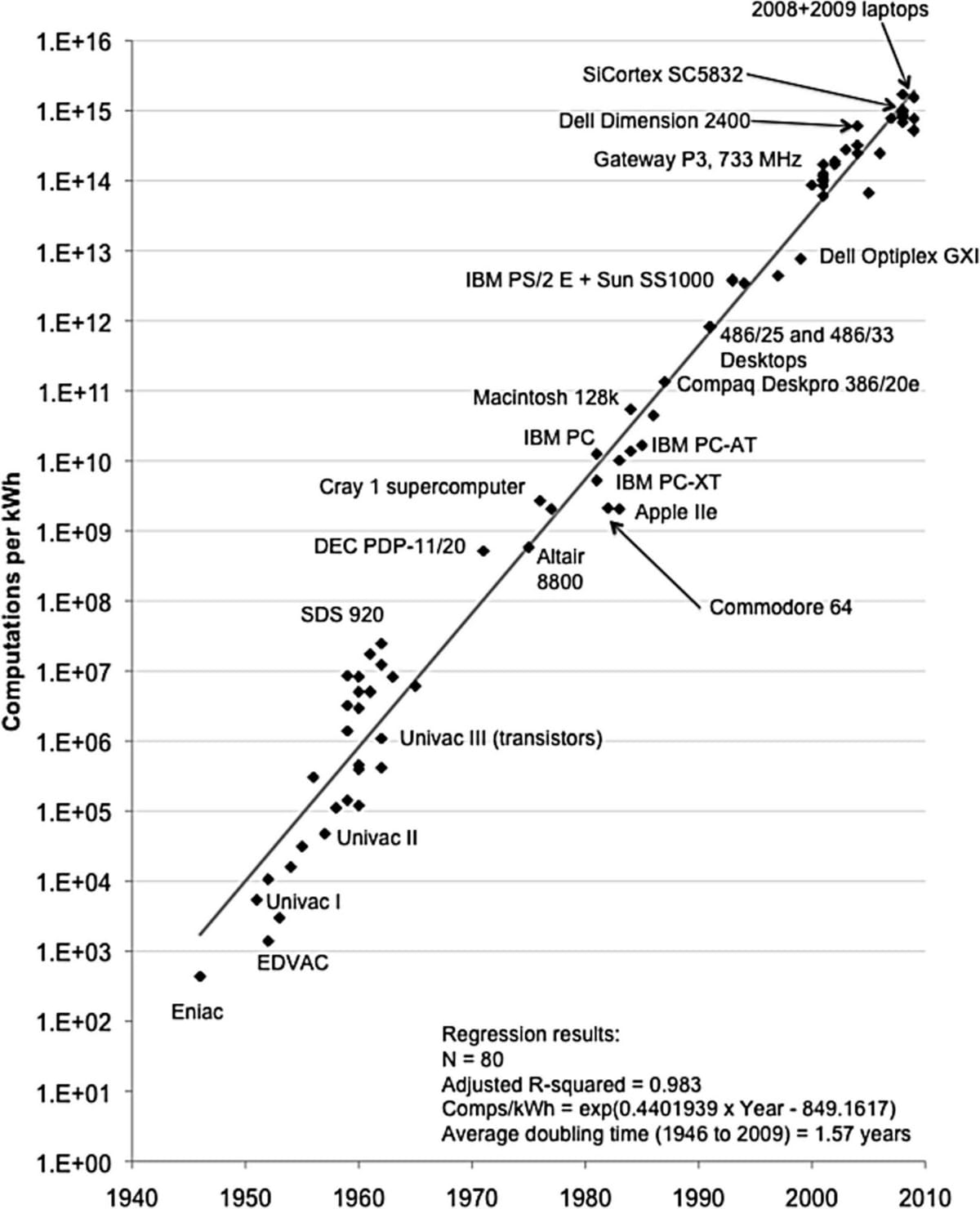

Enter Dr. Jon Koomey, the lead author of an article in the Institute of Electrical and Electronics Engineers’ Annals of the History of Computing. Koomey and his colleagues describe a long-term trend similar to the law first put forth by Gordon Moore in 1965. In view of increasing energy efficiency in computing hardware, Koomey concludes that at “a fixed computing load, the amount of battery you need will fall by a factor of two every year and a half.” Over the past four decades, the data presented show a steep relation between the passage of time and the number of computations per kilowatt-hour.
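Koomey's halving rule can be sketched the same way. The snippet below takes the quoted figure (a factor of two every year and a half) at face value and treats it as a smooth exponential, which is a simplification; the function names are hypothetical.

```python
def battery_fraction(years: float, halving_years: float = 1.5) -> float:
    """Fraction of today's battery needed for the same computing load
    after `years`, assuming the need halves every `halving_years`."""
    return 0.5 ** (years / halving_years)


def runtime_multiple(years: float, halving_years: float = 1.5) -> float:
    """How much longer a same-sized battery would last on the same load."""
    return 1.0 / battery_fraction(years, halving_years)


if __name__ == "__main__":
    for years in (1.5, 3, 6):
        print(f"after {years} years: {battery_fraction(years):.4f} of the battery, "
              f"or {runtime_multiple(years):.0f}x the runtime")
```

On these assumptions, a task that drains a full battery today would need a quarter of one in three years, or equivalently the same battery would last four times as long.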

The discovery itself doesn’t change the technology, but it names and identifies a trend that was central to the development of the market. As processors improve and tech vendors offer more mid-powered models tailored to the needs of consumers, the defining axiom for the digital marketplace is going to be Koomey’s Law. Moore’s axiom is alive and well, but its relevance to everyday consumers is set to decrease. Today’s computing landscape shows a hastening shift towards mobile devices and a shrinking space for consumer desktop computing. With each new processor release, mainstream vendors and consumers will place increasing value on processor energy efficiency and eschew discussions of gigahertz and gigaflops.

The trend has already begun. Small, efficient system-on-a-chip platforms from Samsung, ARM and Qualcomm have stormed to the forefront of the marketplace. The question of which original equipment manufacturer will supply the components for the iPhone 5/iPad 3 has dominated industry speculation for the last six months. Moore’s Intel still maintains a dominant grip on the traditional market, churning out energy-efficient notebook/desktop CPUs and earning the nickname “Chipzilla,” but its attempts to penetrate the mobile space have been unimpressive. The waning consumer relevance of Moore’s Law might just prove to be an appropriate prediction for the fate of Moore’s other great accomplishment within the next decade.

But time will tell. The cloud still needs servers, and servers still need big, hungry, powerful processors. Moore’s Law might lose some of its charm but it will lose none of its truth for the foreseeable future. For now, Koomey’s Law is the golden child of computing progress, offering vital and consistent growth in energy efficiency. As both laws hold true, and our devices grow faster and more efficient, we can expect a third function to continue its meteoric growth: the inevitable and increasing omnipresence of these devices in our everyday lives.
